Introduction

Large Language Models have taken the world by storm. With the arrival of ChatGPT, GPT-3, Bard, and other Large Language Models, developers are constantly working with these models to create new product solutions. Each new day seems to bring a new Large Language Model or a new version of an existing one. Keeping up with them can be difficult, because one has to go through the documentation of each model. LangChain, a library that wraps around many different LLMs, makes this easier. Moreover, LangFlow, a UI based on LangChain, lets you work with these models and create applications directly, which simplifies things further.

Learning Objectives

- To understand LangFlow UI

- To install and work with LangFlow

- To learn the inner workings of LangFlow

- To use LangFlow to create applications

- To share the applications made through LangFlow

What is LangFlow and Why LangFlow?

LangFlow is a graphical UI, designed with react-flow, that is based on the Python package LangChain. LangChain is a Python package for creating applications with Large Language Models. It consists of different Components such as Agents, LLMs, Chains, Memory, and Prompts. Developers chain these blocks together to create applications. LangChain contains wrappers for almost all the popular Large Language Models. To use LangChain, however, one must write code to create applications, and writing code can be time-consuming and error-prone.

This is where LangFlow fits in. It’s a graphical UI based on LangChain, and it contains all the Components that come with LangChain. LangFlow provides a drag-and-drop feature, where you can drag Components onto the screen and start building applications with Large Language Models. It even contains rich examples for everyone to start with. In this article, we will go through this UI and see how to build applications with it.

Let’s Start with LangFlow

Now that we have looked at what LangFlow is and where it fits in, let’s dive into it to get a better understanding of its functionality. The LangFlow UI is available for both JavaScript and Python, and you can pick either one to start working with. For the Python version, you need Python installed on your system, along with the LangChain library.

If you want to work with LangFlow, you need the packages below, which can be installed with pip:

pip install langchain

pip install langflow

The commands above install the LangFlow package and the LangChain package. Now, to start up the UI, use the following command:

python -m langflow

(or)

langflow

Now the LangFlow UI is up and running on localhost, on port 7860. This way, you can install LangFlow and start working with it.
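To confirm from a script that the UI has actually started, a small check like the one below may help. It is only a sketch using the Python standard library: the helper names `langflow_url` and `is_reachable` are made up for this illustration, and the address assumes the default local port 7860 mentioned above.

```python
import urllib.error
import urllib.request


def langflow_url(host: str = "127.0.0.1", port: int = 7860) -> str:
    """Build the local address of the UI (7860 is the port mentioned in the text)."""
    return f"http://{host}:{port}"


def is_reachable(url: str, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers at `url`, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # A server answered, just with an error status code.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, and similar.
        return False


if __name__ == "__main__":
    url = langflow_url()
    status = "reachable" if is_reachable(url) else "not reachable"
    print(f"{url} is {status}")
```

If the script reports that the address is not reachable, the UI is probably still starting or is running on a different port.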

The makers of LangFlow, LogSpace AI, have also deployed LangFlow directly on the HuggingFace website. You can visit the HuggingFace website or find it through a Google search. It will look something like the one below; once it loads, click on New Project.

When you click on New Project, the LangFlow UI will appear.

Here, the white space is where we will create applications by dragging Components onto it. The Components are listed on the left side (Agents, Chains, Loaders, etc.). When you click on one of these groups, you are presented with different types, from which you select one and drag it to the white space. In the white space, you then combine different Components to make up the entire application.

Understanding the LangFlow UI

In this section, we will briefly look at the LangFlow UI, the elements present in it, and how the UI works, to get a better understanding of how to build Large Language Model applications quickly with LangFlow.

In the above pic, we see the top right section of the LangFlow UI. The meanings of the icons are fairly self-explanatory. The second icon represents the Export option. Suppose we have built an application through LangFlow and now want to download it to our local machine. We can do so by converting our application config to a JSON file: clicking on the Export option lets you download a JSON file that contains the information about your application. If a friend wants to build the same application, they can click on the first option, which is Import, and pass your JSON file to it.
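The idea behind Export and Import can be sketched with Python’s standard `json` module. The dictionary below is a hypothetical, simplified description of an application; its field names are assumptions made for illustration, and the schema of a real LangFlow export may differ.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical, simplified application config (not the real export schema).
flow = {
    "name": "my-application",
    "nodes": [
        {"id": "prompt", "type": "PromptTemplate"},
        {"id": "llm", "type": "OpenAI"},
        {"id": "chain", "type": "LLMChain"},
    ],
    "edges": [
        {"source": "prompt", "target": "chain"},
        {"source": "llm", "target": "chain"},
    ],
}


def export_flow(config: dict, path: Path) -> None:
    """Write the application config to a JSON file."""
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")


def import_flow(path: Path) -> dict:
    """Read an application config back from a JSON file."""
    return json.loads(path.read_text(encoding="utf-8"))


# Round trip: what is exported is exactly what the other person imports.
with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "my_application.json"
    export_flow(flow, target)
    restored = import_flow(target)

print(restored == flow)
```

Because the whole application is captured as plain data, sharing it amounts to sending a single file.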

The third option converts your application to Python code, which you can then work with directly on your local system, instead of going to the LangFlow website every time you want to use that application.

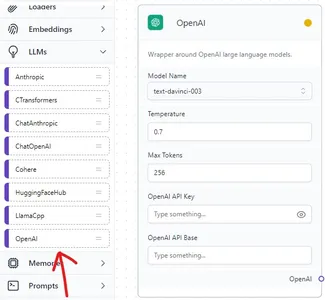

LangChain has wrappers for many Large Language Models, and each Large Language Model has its own API to work with. So when we try to work with an LLM in LangFlow, it gives us the option to add the API key, which can be seen above. Along with that, we also have the option to select the type of Large Language Model we want to work with.

Building a Simple Chat Application with LangFlow

In this section, we will create a simple chatbot application using the LangFlow UI. This application works with OpenAI Large Language Models, so an OpenAI API key is required. The application we will create is a chatbot that answers user questions in a funny way.

So the application we will be creating will have 3 elements, the Large Language Model, the Prompt Template, and the LLM Chain that connects them. Let’s first start by dragging LangChain’s OpenAI wrapper onto the dotted section in the UI.

OpenAI Large Language Model Wrapper

The OpenAI Large Language Model wrapper can be found in the LLM section of LangFlow. We can drag it to the white section and then select the type of model we want to work with. For now, I will set it to a Davinci model. The temperature, i.e. how creative the model should be, is set to 0.7, and max tokens to 256. In the OpenAI API Key field, we need to provide the OpenAI API key; the field below that can be left blank.

From the pic, we see that we have dragged the Prompt Template to the white section. Here the application we are developing is a simple bot that replies funnily, so we have to write the Prompt template accordingly.

Prompt Template

You are an AI bot that responds to each query given by the Human in a funny way.

Human: {query}

AI:

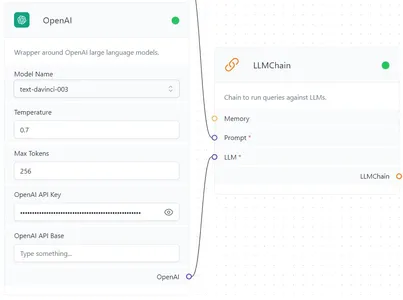
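To see what the `{query}` placeholder does, the template above can be filled in with plain Python string formatting. This is a minimal sketch using only the standard library, not LangChain’s own prompt class; the helper names `input_variables` and `render` are made up for this example.

```python
from string import Formatter

# The prompt template from the text, with {query} as its only placeholder.
TEMPLATE = (
    "You are an AI bot that responds to each query given by the Human "
    "in a funny way.\n\n"
    "Human: {query}\n\n"
    "AI:"
)


def input_variables(template: str) -> list[str]:
    """List the placeholder names found in a template, in order of appearance."""
    return [name for _, name, _, _ in Formatter().parse(template) if name]


def render(template: str, **values: str) -> str:
    """Substitute values for the placeholders in the template."""
    return template.format(**values)


print(input_variables(TEMPLATE))
print(render(TEMPLATE, query="How are you?"))
```

The rendered text is what gets sent to the model: the instruction comes first, the user’s question follows "Human:", and the model continues after "AI:".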

Now we come to the last element, which is the Chain element. The LLMChain is the one that combines, that is, chains, our OpenAI Large Language Model and the Prompt Template together. The LLMChain can be found in the Chains section on the left side of the UI. Finally, it’s time to chain everything together.

Like the pics above, connect the Prompt Template to the Prompt of the LLMChain and connect the OpenAI end to the LLM end of LLMChain.
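Conceptually, the connections just described do something like the sketch below: the chain fills in the prompt and hands the result to the model. This is an offline illustration under stated assumptions, not LangChain’s implementation. `SketchPrompt`, `SketchChain`, and `fake_llm` are hypothetical stand-ins, and `fake_llm` merely echoes the question instead of calling OpenAI.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SketchPrompt:
    """Stand-in for a prompt template: text with named placeholders."""
    template: str

    def format(self, **values: str) -> str:
        return self.template.format(**values)


@dataclass
class SketchChain:
    """Stand-in for a chain: format the prompt, then pass it to the model."""
    prompt: SketchPrompt
    llm: Callable[[str], str]

    def run(self, **values: str) -> str:
        return self.llm(self.prompt.format(**values))


def fake_llm(prompt: str) -> str:
    """Placeholder model: pulls out the question instead of calling a real LLM."""
    question = prompt.split("Human:")[-1].split("AI:")[0].strip()
    return f"(pretend joke about: {question})"


prompt = SketchPrompt(
    "You are an AI bot that responds to each query given by the Human "
    "in a funny way.\n\nHuman: {query}\n\nAI:"
)
chain = SketchChain(prompt=prompt, llm=fake_llm)
print(chain.run(query="How are you?"))
```

In the UI, the two edges drawn into the LLMChain play the role of the `prompt` and `llm` arguments here.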

Now it’s time to test our application. To run it, click on the Thunderbolt icon at the bottom right of the UI. If the OpenAI key is valid, a green dot will be displayed at the top of each element, near the element’s name (initially it’s yellow), and a blue Chat icon will appear below the Thunderbolt icon. Click on the blue Chat icon.

After clicking on it, a chat window will open. Now you can chat with the Large Language Model that we configured in the UI. Let’s try asking the bot “How are you?”, to which the bot should give a funny response.

We can see that the bot has indeed responded in a funny way by following our Prompt Template. You can share the application with anyone by exporting it to a JSON file. This is just one sample use case of what we can achieve with LangFlow and LangChain.

Conclusion

The LangFlow UI is built on top of Python’s LangChain framework, a package that’s widely used to create applications with Large Language Models. We have seen the Components in the UI, and have seen how one can build applications with the UI and share them with another person by exporting them to a JSON file. With this UI, the possibilities to create high-end applications are endless.

Key Takeaways

- LangFlow provides an easy drag-and-drop feature to build applications with LLMs

- LangFlow UI is available in both Python and JavaScript

- This UI allows users to convert their application to JSON files, thus making them easy to share

- LangFlow also comes as a Python package, which the user can install to run an application built in LangFlow by providing the path to its JSON file

- LangFlow makes it easier for non-developers to build applications with Large Language Models

Frequently Asked Questions

A. LangChain is a Python Package to build applications with Large Language Models. It contains a set of components chained together to create these applications.

A. LangChain is a framework that provides tools for developing applications with Large Language Models, while LangFlow is a UI built on top of LangChain that makes the development process smoother with a no-code/low-code approach.

A. Python and JavaScript programming languages support LangFlow. One can work with it directly through the HuggingFace website.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.